Welcome to the first edition of FRCSyn, the Face Recognition Challenge in the Era of Synthetic Data organized at WACV 2024.

To promote and advance the use of synthetic data for face recognition, we organize the first edition of the Face Recognition Challenge in the Era of Synthetic Data (FRCSyn). The challenge explores the application of synthetic data to face recognition in order to address current limitations of the technology, for example privacy concerns associated with real data, bias across demographic groups (e.g., ethnicity and gender), and poor performance in challenging conditions such as large age gaps between enrolment and testing, pose variations, and occlusions.

This challenge intends to provide an in-depth analysis of the following research questions:

- What are the limits of face recognition technology trained only with synthetic data?

- Can the use of synthetic data help reduce the current limitations of face recognition technology?

The FRCSyn challenge will analyze the improvements achieved using synthetic data and state-of-the-art face recognition technology in realistic scenarios, providing valuable contributions to advance the field.

FRCSyn Challenge: Summary Paper

📝 The summary paper of the FRCSyn Challenge is available here.

News

- 30 Nov 2023 Schedule for the Workshop is available

- 20 Nov 2023 Summary paper available

- 30 Oct 2023 FRCSyn Challenge ends

- 10 Oct 2023 Deadline extended to 30 October

- 13 Sep 2023 FRCSyn Challenge starts

- 10 Sep 2023 Website is live!

Schedule, 8th January 2024, Morning

| Time (HST) | Duration | Activity |
|---|---|---|
| 8:20 – 8:30 | 10 min | Introduction |
| 8:30 – 9:15 | 45 min | Keynote 1: Koki Nagano |
| 9:15 – 10:00 | 45 min | Keynote 2: Fernando De la Torre |
| 10:00 – 10:15 | 15 min | 1st Break |
| 10:15 – 10:35 | 20 min | FRCSyn Challenge |
| 10:35 – 10:45 | 10 min | FRCSyn Challenge: Q&A |
| 10:45 – 11:20 | 35 min | Top-ranked Teams (5) |
| 11:20 – 11:35 | 15 min | Notable Teams (3) |
| 11:35 – 11:50 | 15 min | 2nd Break |
| 11:50 – 12:35 | 45 min | Keynote 3: Xiaoming Liu |
| 12:35 – 12:45 | 10 min | Closing Notes |

Keynote Speakers

Title: In the Era of Synthetic Avatars: From Training to Verification

Abstract: We are witnessing a rise in generative AI technologies that make the creation of digital avatars automated and accessible to anyone. While it is clear that such AI technologies will benefit numerous digital human applications, from video conferencing to AR/VR, their successful adoption hinges on their ability to generalize to real-world data. In this talk, I discuss our recent efforts to utilize synthetic data to create AI models for synthesizing photorealistic humans. First, I will discuss how carefully constructed, diverse synthetic data enables the training of a single-view portrait relighting model that generalizes to real images. I will also share our recent efforts on learning to generate photorealistic 3D faces from a collection of in-the-wild 2D images. Furthermore, I will show how such photorealistic 3D synthetic data can be used to train another AI model for downstream applications, such as a single-view reconstruction of photorealistic faces. Lastly, I will address the emerging challenge of verifying the authenticity of synthetic avatars in a world where their legitimate use becomes ubiquitous.

Short bio: Koki Nagano works at the intersection of Computer Graphics, Vision and AI. Currently, he is a Senior Research Scientist at NVIDIA. His research focuses on realistic digital humans synthesis, neural media synthesis and trustworthy visual computing including detection and prevention of visual misinformation in collaboration with DARPA. His PhD thesis focused on the topic of high-fidelity 3D human capture (USC, 2017), and he was supervised by Prof. Paul Debevec at USC ICT. Previously he was a Principal Scientist at Pinscreen Inc. During his PhD, he worked for Weta Digital on Hollywood blockbuster movies and for the Meta Reality Labs in Pittsburgh. He got his Bachelor of Engineering from Tokyo Institute of Technology with focus on Environmental Design.

Title: Zero-shot/few-shot learning for model diagnosis and debiasing generative models, and its applications to face analysis

Abstract: In practice, metric analysis on a specific train and test dataset does not guarantee reliable or fair ML models. This is partially due to the fact that obtaining a balanced (i.e., uniformly sampled over all the important attributes), diverse, and perfectly labeled test dataset is typically expensive, time-consuming, and error-prone. To address this issue, first, I will describe zero-shot model diagnosis, a technique to assess deep learning model failures in visual data. In particular, the method will evaluate the sensitivity of deep learning models to arbitrary visual attributes without the need for a test set. In the second part of the talk, I will describe IT-GEN, an inclusive text-to-image generative model that generates images based on human-written prompts and ensures the resulting images are uniformly distributed across attributes of interest. Applications related to face recognition will be described.

Short bio: Fernando De la Torre received his B.Sc. degree in Telecommunications, as well as his M.Sc. and Ph.D. degrees in Electronic Engineering from La Salle School of Engineering at Ramon Llull University, Barcelona, Spain in 1994, 1996, and 2002, respectively. He has been a research faculty member in the Robotics Institute at Carnegie Mellon University since 2005. In 2014 he founded FacioMetrics LLC to license technology for facial image analysis (acquired by Facebook in 2016). His research interests are in the fields of Computer Vision and Machine Learning, in particular applications to human health, augmented reality, virtual reality, and methods that focus on the data (not the model). He directs the Human Sensing Laboratory (HSL).

Title: Biometric Recognition in the Era of AI Generated Content (AIGC)

Abstract: In recent years we have witnessed impressive progress on AIGC (Artificial Intelligence Generated Content). AIGC has many applications in our society and benefits diverse computer vision tasks. In the context of biometric recognition, we believe that the AIGC era calls for innovation in both data synthesis and how to leverage the synthetic data. In this talk, I will present a number of efforts that showcase these innovations, including: 1) how to bridge the gap between the training data distribution and test data distribution; 2) how to generate a complete synthetic database to train face recognition models; 3) how to estimate the 3D body shape from an image of a clothed human body; and 4) how to manipulate a human body image by changing its body pose, clothing style, background, and identity.

Short bio: Dr. Xiaoming Liu is the MSU Foundation Professor, and Anil and Nandita Jain Endowed Professor at the Department of Computer Science and Engineering of Michigan State University (MSU). He is also a visiting scientist at Google Research. He received his Ph.D. degree from Carnegie Mellon University in 2004. Before joining MSU in 2012 he was a research scientist at General Electric (GE) Global Research. He works on computer vision, machine learning, and biometrics, especially on face-related analysis and 3D vision. Since 2012 he has helped develop a strong computer vision area at MSU, which is ranked in the top 15 in the US according to the 5-year statistics at csrankings.org. He is an Associate Editor of IEEE Transactions on Pattern Analysis and Machine Intelligence. He has authored more than 200 scientific publications, and has filed 29 U.S. patents. His work has been cited over 20000 times, with an H-index of 74. He is a fellow of IEEE and IAPR.

Tasks

The FRCSyn challenge focuses on the following two challenges in current face recognition technology:

- Task 1: synthetic data for demographic bias mitigation.

- Task 2: synthetic data for overall performance improvement (e.g., age, pose, expression, occlusion, demographic groups, etc.).

Within each task, there are two sub-tasks that propose alternative approaches for training face recognition technology: one exclusively with synthetic data and the other with a possible combination of real and synthetic data.

Synthetic Datasets

In the FRCSyn Challenge, we will provide participants with our synthetic datasets after registration in the challenge. They are based on our two recent approaches:

DCFace: a novel framework entirely based on Diffusion models, composed of i) a sampling stage for the generation of synthetic identities X_ID, and ii) a mixing stage for the generation of images X_ID,sty with the same identities X_ID from the sampling stage and the style selected from a “style bank” of images X_sty.

Reference: M. Kim, F. Liu, A. Jain and X. Liu, “DCFace: Synthetic Face Generation with Dual Condition Diffusion Model”, in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023.

GANDiffFace: a novel framework based on GANs and Diffusion models that provides fully-synthetic face recognition datasets with the desired properties of human face realism, controllable demographic distributions, and realistic intra-class variations. Best Paper Award at AMFG @ ICCV 2023.

Reference: P. Melzi, C. Rathgeb, R. Tolosana, R. Vera-Rodriguez, D. Lawatsch, F. Domin, M. Schaubert, “GANDiffFace: Controllable Generation of Synthetic Datasets for Face Recognition with Realistic Variations”, in Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, 2023.

Registration

The platform used in the FRCSyn Challenge is CodaLab. Participants need to register to take part in the challenge. Please follow these steps:

1. Fill out this form with your information.

2. Sign up in CodaLab using the same email address provided in step 1.

3. Join the FRCSyn Challenge in CodaLab by clicking the “Participate” tab.

4. We will give you access once we have checked that everything is correct.

5. You will receive an email with all the instructions to kickstart FRCSyn, including links to download the datasets, the experimental protocol, and an example submission file.

Paper

The best teams of each sub-task will be invited to contribute as co-authors of the summary paper of the FRCSyn challenge. This paper will be published in the proceedings of the WACV 2024 conference. In addition, top performers will be invited to present their methods at the workshop. This presentation can be virtual.

Important Dates

- 13 Sep 2023 FRCSyn starts

- 30 Oct 2023 FRCSyn ends

- 2 Nov 2023 Announcement of winning teams

- 19 Nov 2023 Paper submission with results of the challenge

- 8 Jan 2024 FRCSyn Workshop at WACV 2024

FRCSyn at WACV 2024: Results

To determine the winners of sub-tasks 1.1 and 1.2 we consider Trade-off Accuracy, defined as the difference between the average and standard deviation of accuracy across demographic groups. To determine the winners of sub-tasks 2.1 and 2.2 we consider the average of verification accuracy across datasets.
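The two ranking metrics described above can be sketched in a few lines of Python. This is only an illustration: the per-group and per-dataset accuracies are hypothetical placeholder values, the function names are made up for this example, and the use of the population standard deviation is an assumption, since the text above does not say which estimator is used.

```python
from statistics import mean, pstdev


def trade_off_accuracy(group_accuracies):
    """Return (trade-off, average, std) in percent.

    group_accuracies maps a demographic group name to its verification
    accuracy in percent. Trade-off Accuracy is AVG - STD across groups.
    """
    values = list(group_accuracies.values())
    avg = mean(values)
    std = pstdev(values)  # assumption: population standard deviation
    return avg - std, avg, std


def average_accuracy(dataset_accuracies):
    """Average verification accuracy across datasets (sub-tasks 2.1 and 2.2)."""
    return mean(dataset_accuracies.values())


# Hypothetical per-group accuracies [%], for illustration only.
groups = {"group_a": 94.0, "group_b": 92.0, "group_c": 96.0, "group_d": 94.0}
to, avg, std = trade_off_accuracy(groups)
print(f"AVG={avg:.2f}  STD={std:.2f}  Trade-off={to:.2f}")

# Hypothetical per-dataset accuracies [%], for illustration only.
datasets = {"dataset_a": 90.0, "dataset_b": 95.0}
print(f"Average accuracy across datasets: {average_accuracy(datasets):.2f}")
```

Subtracting the standard deviation penalizes a system whose accuracy varies strongly between demographic groups, so two systems with the same average accuracy are ranked by how evenly they perform.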

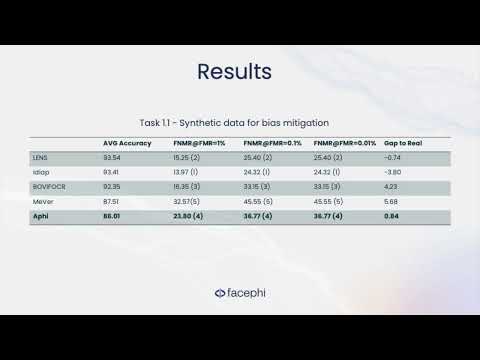

Task 1.1 - synthetic data for bias mitigation

| # | User | Entries | Date of Last Entry | Team Name | Trade-off Accuracy (AVG - STD) [%] | AVG Accuracy [%] | STD Accuracy [%] | FNMR@FMR=1% [%] | Gap to Real [%] |
|---|---|---|---|---|---|---|---|---|---|
| 1 | lens | 44 | 10/30/23 | LENS | 92.25 (1) | 93.54 (1) | 1.28 (3) | 15.25 (2) | -0.74 (7) |
| 2 | anjith2006 | 15 | 10/30/23 | Idiap | 91.88 (2) | 93.41 (2) | 1.53 (4) | 13.97 (1) | -3.80 (4) |
| 3 | bjgbiesseck | 14 | 10/30/23 | BOVIFOCR-UFPR | 90.51 (3) | 92.35 (3) | 1.84 (5) | 16.35 (3) | 4.23 (9) |
| 4 | ckoutlis | 20 | 10/27/23 | MeVer Lab | 87.51 (4) | 89.62 (4) | 2.11 (6) | 32.57 (5) | 5.68 (10) |
| 5 | asanchez | 6 | 10/30/23 | Aphi | 82.24 (5) | 86.01 (5) | 3.77 (10) | 23.80 (4) | 0.84 (8) |

Task 1.2 - mixed data for bias mitigation

| # | User | Entries | Date of Last Entry | Team Name | Trade-off Accuracy (AVG - STD) [%] | AVG Accuracy [%] | STD Accuracy [%] | FNMR@FMR=1% [%] | Gap to Real [%] |
|---|---|---|---|---|---|---|---|---|---|
| 1 | zhaoweisong | 68 | 10/30/23 | CBSR | 95.25 (1) | 96.45 (1) | 1.20 (3) | 8.68 (4) | -2.10 (5) |
| 2 | lens | 44 | 10/30/23 | LENS | 95.24 (2) | 96.35 (2) | 1.11 (1) | 6.35 (2) | -5.67 (4) |
| 3 | ckoutlis | 20 | 10/27/23 | MeVer Lab | 93.87 (3) | 95.44 (3) | 1.56 (4) | 9.50 (5) | -0.78 (6) |
| 4 | bjgbiesseck | 14 | 10/30/23 | BOVIFOCR-UFPR | 93.15 (4) | 95.04 (4) | 1.89 (5) | 10.00 (6) | 1.28 (9) |
| 5 | atzoriandrea | 8 | 10/30/23 | UNICA-FRAUNHOFER IGD | 91.03 (5) | 94.06 (5) | 3.03 (6) | 6.85 (3) | -10.62 (2) |
| 6 | anjith2006 | 15 | 10/30/23 | Idiap | 87.22 (6) | 91.54 (6) | 4.32 (8) | 5.50 (1) | -0.65 (7) |

Task 2.1 - synthetic data for performance improvement

| # | User | Entries | Date of Last Entry | Team Name | AVG Accuracy [%] | FNMR@FMR=1% [%] | Gap to Real [%] |
|---|---|---|---|---|---|---|---|
| 1 | bjgbiesseck | 14 | 10/30/23 | BOVIFOCR-UFPR | 90.50 (1) | 20.83 (1) | 2.66 (3) |
| 2 | lens | 44 | 10/30/23 | LENS | 88.18 (2) | 33.25 (3) | 3.75 (5) |
| 3 | anjith2006 | 15 | 10/30/23 | Idiap | 86.39 (3) | 30.73 (2) | 6.39 (6) |
| 4 | nicolo.didomenico | 5 | 10/29/23 | BioLab | 83.93 (4) | 49.51 (5) | 6.88 (7) |
| 5 | ckoutlis | 20 | 10/27/23 | MeVer Lab | 83.45 (5) | 50.05 (6) | 3.20 (4) |
| 6 | asanchez | 6 | 10/30/23 | Aphi | 80.53 (6) | 46.09 (4) | 9.12 (8) |

Task 2.2 - mixed data for performance improvement

| # | User | Entries | Date of Last Entry | Team Name | AVG Accuracy [%] | FNMR@FMR=1% [%] | Gap to Real [%] |
|---|---|---|---|---|---|---|---|
| 1 | zhaoweisong | 68 | 10/30/23 | CBSR | 94.95 (1) | 10.82 (1) | -3.69 (3) |
| 2 | lens | 44 | 10/30/23 | LENS | 92.40 (2) | 17.67 (4) | -1.63 (4) |
| 3 | anjith2006 | 15 | 10/30/23 | Idiap | 91.74 (3) | 23.27 (5) | 0.00 (7) |
| 4 | bjgbiesseck | 14 | 10/30/23 | BOVIFOCR-UFPR | 91.34 (4) | 16.51 (2) | 1.77 (8) |
| 5 | ckoutlis | 20 | 10/27/23 | MeVer Lab | 87.60 (5) | 17.10 (3) | -1.57 (5) |
| 6 | atzoriandrea | 8 | 10/30/23 | UNICA-FRAUNHOFER IGD | 84.86 (6) | 39.35 (6) | -27.43 (1) |
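The FNMR@FMR=1% column in the tables above reports the False Non-Match Rate at the operating point where the False Match Rate is 1%. The following is a minimal sketch of how such a value is commonly computed from comparison scores. It does not reproduce the challenge's evaluation code: the function name, the toy scores, and the convention that a comparison is accepted when its similarity score is at or above the threshold are all assumptions made for illustration.

```python
def fnmr_at_fmr(genuine_scores, impostor_scores, target_fmr=0.01):
    """Return (FNMR, threshold) at the lowest threshold with FMR <= target_fmr.

    Scores are similarities: higher means "more likely the same identity".
    Assumption: a comparison is accepted when score >= threshold.
    """
    genuine = list(genuine_scores)
    impostor = list(impostor_scores)
    # Candidate thresholds: every observed score, in ascending order, plus
    # one value above the maximum so that FMR = 0 is always reachable.
    candidates = sorted(set(genuine) | set(impostor))
    candidates.append(candidates[-1] + 1.0)
    for threshold in candidates:
        # FMR: fraction of impostor comparisons wrongly accepted.
        fmr = sum(s >= threshold for s in impostor) / len(impostor)
        if fmr <= target_fmr:
            # FNMR: fraction of genuine comparisons wrongly rejected.
            fnmr = sum(s < threshold for s in genuine) / len(genuine)
            return fnmr, threshold
    raise ValueError("target_fmr must be non-negative")


# Toy scores, for illustration only (not challenge data).
impostor = [i / 200 for i in range(100)]  # 0.000, 0.005, ..., 0.495
genuine = [0.30, 0.45, 0.49, 0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]

fnmr, threshold = fnmr_at_fmr(genuine, impostor, target_fmr=0.01)
print(f"FNMR@FMR=1%: {100 * fnmr:.2f}% at threshold {threshold:.3f}")
```

Lower values are better: at a fixed 1% rate of false matches, a smaller FNMR means fewer genuine comparisons are rejected.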

FRCSyn at WACV 2024: Top Teams

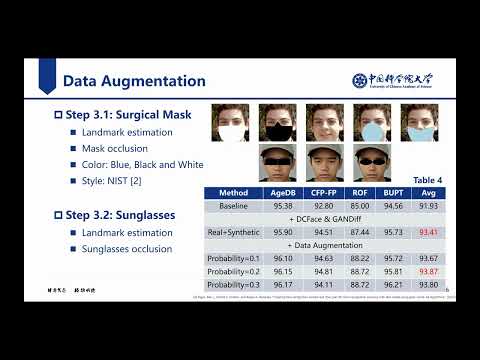

CBSR

Weisong Zhao, Xiangyu Zhu, Zheyu Yan, Xiao-Yu Zhang, Jinlin Wu, Zhen Lei

IIE, CAS, China; School of Cyber Security, UCAS, China; MAIS, CASIA, China; School of Artificial Intelligence, UCAS, China; CAIR, HKISI, CAS, China

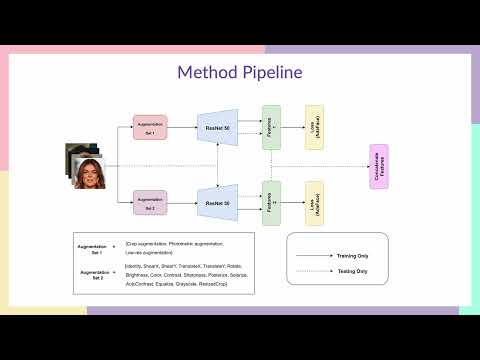

LENS

Suvidha Tripathi, Mahak Kothari, Md Haider Zama, Debayan Deb

LENS, Inc., US

BOVIFOCR-UFPR

Bernardo Biesseck, Pedro Vidal, Roger Granada, Guilherme Fickel, Gustavo Führ, David Menotti

Federal University of Parana, Curitiba, PR, Brazil; Federal Institute of Mato Grosso, Pontes e Lacerda, Brazil; unico - idTech, Brazil

Idiap

Alexander Unnervik, Anjith George, Christophe Ecabert, Hatef Otroshi Shahreza, Parsa Rahimi, Sébastien Marcel

Idiap Research Institute, Switzerland; Ecole Polytechnique Fédérale de Lausanne, Switzerland; Universite de Lausanne, Switzerland

MeVer

Ioannis Sarridis, Christos Koutlis, Georgia Baltsou, Symeon Papadopoulos, Christos Diou

Centre for Research and Technology Hellas, Greece; Harokopio University of Athens, Greece

BioLab

Nicolò Di Domenico, Guido Borghi, Lorenzo Pellegrini

University of Bologna, Cesena Campus, Italy

Aphi

Enrique Mas-Candela, Ángela Sánchez-Pérez

Facephi, Spain

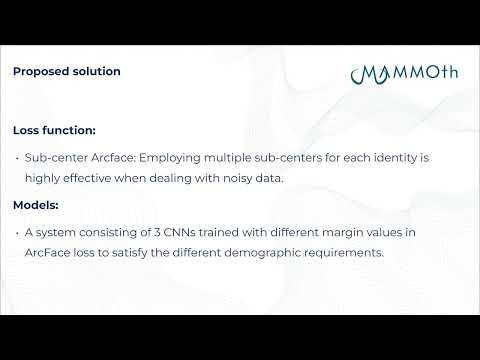

UNICA-FRAUNHOFER IGD

Andrea Atzori, Fadi Boutros, Naser Damer, Gianni Fenu, Mirko Marras

University of Cagliari, Italy; Fraunhofer IGD, Germany; TU Darmstadt, Germany

FRCSyn at WACV 2024: Videos

The video presentations of the Top Teams are available here.

- CBSR

- LENS

- BOVIFOCR-UFPR

- Idiap

- MeVer

- BioLab

- Aphi

- UNICA-FRAUNHOFER IGD

Organizers

Funding
